Variational Method

Recall that in quantum mechanics, the ground state energy can be defined variationally as

\[E_0 = \min_{\psi} \frac{\langle\psi|H|\psi\rangle}{\langle\psi|\psi\rangle}.\]

To obtain an exact result, the minimum is taken over the full Hilbert space. But we can obtain an upper bound on \(E_0\) from a few-parameter ansatz \(\psi=\left|g_{1}, g_{2}, \cdots\right\rangle\), where the \(g_i\) are parameters. For example, we could approximate \(\psi\) by the Gaussian \(\langle r|g\rangle=e^{-\frac{1}{2} g r^{2}}\) with just one parameter \(g\). Then,

\[E_0 \leq \min_{g} \frac{\langle g|H|g\rangle}{\langle g|g\rangle}.\]

The \(|g\rangle\) obtained in this way is in this sense the “best” approximation to \(\left|E_{0}\right\rangle\) in the Hilbert subspace \(\mid \{ g\} \rangle\).
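As a minimal sketch of the one-parameter Gaussian ansatz, the snippet below treats a hypothetical example that is not part of these notes: the 1D anharmonic oscillator \(H=p^2/2+x^4\) in units \(\hbar=m=1\). It scans \(g\), evaluates \(\langle g|H|g\rangle/\langle g|g\rangle\) by quadrature on a grid, and compares the minimum with the lowest eigenvalue of a finite-difference Hamiltonian, which serves here as a stand-in for the exact \(E_0\). The Hamiltonian, grid sizes, and scan range are all assumptions chosen for illustration.

```python
import numpy as np

# Hypothetical example (not from the notes): H = p^2/2 + x^4, hbar = m = 1.
x = np.linspace(-6.0, 6.0, 801)
dx = x[1] - x[0]

def energy(g):
    """<g|H|g>/<g|g> for the trial state psi(x) = exp(-g x^2 / 2)."""
    psi = np.exp(-0.5 * g * x**2)
    dpsi = -g * x * psi                      # analytic derivative of psi
    kinetic = 0.5 * np.sum(dpsi**2) * dx     # (1/2) * integral of |psi'|^2
    potential = np.sum(x**4 * psi**2) * dx
    norm = np.sum(psi**2) * dx
    return (kinetic + potential) / norm

# Scan the single variational parameter and keep the minimum
g_grid = np.linspace(0.5, 4.0, 701)
E_grid = np.array([energy(g) for g in g_grid])
g_best = g_grid[np.argmin(E_grid)]
E_var = E_grid.min()

# Reference value: lowest eigenvalue of the finite-difference Hamiltonian
main = 1.0 / dx**2 + x**4
off = -0.5 / dx**2 * np.ones(len(x) - 1)
H = np.diag(main) + np.diag(off, 1) + np.diag(off, -1)
E_ref = np.linalg.eigvalsh(H)[0]

print(f"best g = {g_best:.3f}, variational E = {E_var:.4f}, reference E0 = {E_ref:.4f}")
```

For this particular ansatz the integrals can also be done by hand, giving \(E(g)=g/4+3/(4g^2)\) with its minimum at \(g=6^{1/3}\); the numerical scan should reproduce that value, and the variational energy should sit slightly above the reference.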

We can do something similar in statistical physics.

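Given \(T, H\), we define the free energy of an arbitrary distribution \(p\) by

\[F[p] \equiv \langle H\rangle_{p} - T S[p] = \sum_{\mu} p_{\mu} H(\mu) + k_B T \sum_{\mu} p_{\mu} \ln p_{\mu},\]

where we’ve used the Gibbs entropy

\[S[p] = -k_B \sum_{\mu} p_{\mu} \ln p_{\mu}.\]

Recall that the Boltzmann distribution \(\hat p\equiv \frac{1}{Z_{\beta}} e^{-\beta H}=e^{-\beta (H-F_0)}\) minimizes the functional \(F\) (because \(\hat p\) maximizes the Gibbs entropy at fixed mean energy \(\langle H\rangle\)). Therefore, we have the inequality

\[F[p] \geq F[\hat p] = F_0 = -\beta^{-1} \ln Z_{\beta}.\]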

Given a few-parameter ansatz \(p_{\mu}(g)\), we can then define our best approximation as

\[g^{*} = \underset{g}{\arg\min}\; F[p(g)], \qquad F[p(g^{*})] \geq F_0,\]

and use \(p(g^{*})\) to compute approximate observables.

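Note that there is a simple way to see that the inequality \(F[p]\geq F_0\) is true. First, the difference between the approximate and true free energy is

\[F[p]-F_0 = \left(\langle H\rangle_{p} - F_0\right) + k_B T\,\langle \ln p\rangle_{p}.\]

The first term can be rewritten after noting that the structure of the true distribution, \(\hat p=e^{-\beta (H-F_0)}\), allows us to write \(\langle \ln \hat p \rangle_{p} = -\beta (\langle H \rangle_{p} - F_0)\). Thus, we obtain

\[F[p]-F_0 = k_B T\left(\langle \ln p\rangle_{p} - \langle \ln \hat p\rangle_{p}\right) = k_B T \sum_{\mu} p_{\mu} \ln \frac{p_{\mu}}{\hat p_{\mu}},\]

showing that the difference between the approximate and true free energy is proportional to the “KL” divergence

\[D_{KL}(p \,\|\, \hat p) = \sum_{\mu} p_{\mu} \ln \frac{p_{\mu}}{\hat p_{\mu}},\]

which is non-negative (see Shannon entropy lecture, eq. (2)).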
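The identity between the free-energy gap and the KL divergence can be checked numerically on a toy system. The sketch below assumes units with \(k_B=1\) and uses eight microstates with randomly drawn energies; both the energies and the trial distribution are made-up inputs, not data from these notes.

```python
import numpy as np

rng = np.random.default_rng(0)
kT = 1.3                        # temperature, in units with k_B = 1 (assumption)
beta = 1.0 / kT
E = rng.normal(size=8)          # hypothetical energies H(mu) of 8 microstates

# Boltzmann distribution and exact free energy F_0 = -kT ln Z
Z = np.sum(np.exp(-beta * E))
p_hat = np.exp(-beta * E) / Z
F0 = -kT * np.log(Z)

def free_energy(p):
    """F[p] = <H>_p - T S[p], with S[p] = -sum_mu p_mu ln p_mu."""
    return np.sum(p * E) + kT * np.sum(p * np.log(p))

def kl(p, q):
    """Kullback-Leibler divergence D_KL(p || q)."""
    return np.sum(p * np.log(p / q))

# An arbitrary normalized trial distribution
p = rng.random(8)
p /= p.sum()

gap = free_energy(p) - F0
print(f"F[p] - F_0 = {gap:.6f},  kT * D_KL = {kT * kl(p, p_hat):.6f}")
```

The two printed numbers should agree to floating-point precision, and the gap vanishes only when \(p=\hat p\).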

It is convenient to parameterize \(p(g)\) as a Boltzmann distribution corresponding to a fictitious Hamiltonian \(H_g\),

\[p_{\mu}(g) = \frac{1}{Z_{g}} e^{-\beta H_{g}(\mu)}.\]

Consider, for example, the generalized Ising Hamiltonian

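where the notation \(\langle i, j\rangle\) indicates that sites \(i\) and \(j\) are in contact (i.e. they are nearest neighbors) and each pair is only counted once. We might then choose \(H_{g}=-g \sum_{i} \sigma_{i}\), where \(g\) is a parameter we will adjust. In terms of \(H_g\), we have

\[F[p(g)] = \langle H\rangle_{g} + k_B T\,\langle \ln p(g)\rangle_{g} = \langle H\rangle_{g} - \langle H_{g}\rangle_{g} - \beta^{-1} \ln Z_{g},\]

where \(\langle\cdot\rangle_{g}\) denotes an average over \(p(g)\). Defining \(e^{-\beta F_{g}}\equiv Z_{g}= \sum_{\mu} e^{-\beta H_{g}(\mu)}\) (note that \(F_{g} \neq F[p(g)]\)!), we have

\[F[p(g)] = F_{g} + \langle H - H_{g}\rangle_{g}.\]

The lower bound

\[F[p(g)] \geq F_0\]

is called the Gibbs inequality. It can be rewritten as

\[F_0 \leq F_{g} + \langle H - H_{g}\rangle_{g}.\]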

Let’s try this for

\[H=-J\sum_{\langle i, j\rangle} \sigma_{i} \sigma_{j}, \qquad H_{g}=-g \sum_{i} \sigma_{i}.\]

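We need to compute \(Z_{g}\), \(\left\langle H_{g}\right\rangle_{g}\), and \(\langle H\rangle_{g}\). For \(N\) spins,

\[Z_{g} = \sum_{\{\sigma\}} e^{\beta g \sum_{i} \sigma_{i}} = (2\cosh \beta g)^{N}, \qquad \langle H_{g}\rangle_{g} = -g \sum_{i} \langle \sigma_{i}\rangle_{g} = -N g\, m_{g},\]

which depends on the mean magnetization \(m_g\) under the fictitious Hamiltonian,

\[m_{g} \equiv \langle \sigma_{i}\rangle_{g} = \tanh(\beta g).\]

And crucially, because under \(p(g)\) the different spins are uncorrelated,

\[\langle \sigma_{i} \sigma_{j}\rangle_{g} = \langle \sigma_{i}\rangle_{g} \langle \sigma_{j}\rangle_{g} = m_{g}^{2} \quad (i \neq j),\]

we obtain for the \(p(g)\)-averaged energy

\[\langle H\rangle_{g} = -J \sum_{\langle i, j\rangle} m_{g}^{2} = -\frac{J N \zeta}{2}\, m_{g}^{2},\]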

where \(\zeta\) is the number of nearest neighbors each spin has - the so-called coordination number.
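The averages under \(p(g)\) and the resulting bound can be checked by brute force on a system small enough to enumerate. The sketch below assumes a ring of \(N=8\) spins (so \(\zeta=2\)), units with \(k_B=1\), and arbitrary illustrative values of \(J\), \(g\), and \(T\). It compares the enumerated \(m_g\) with \(\tanh(\beta g)\) and \(\langle H\rangle_g\) with \(-\tfrac{1}{2}JN\zeta m_g^2\), then evaluates \(F_g+\langle H-H_g\rangle_g\) against the exact \(F_0\).

```python
import itertools
import numpy as np

J, g, kT, N = 1.0, 0.7, 1.5, 8      # illustrative values, k_B = 1 (assumptions)
beta = 1.0 / kT
zeta = 2                             # on a ring each spin has two neighbors

# All 2^N spin configurations, one per row
configs = np.array(list(itertools.product([-1, 1], repeat=N)))

# True Hamiltonian: each bond (i, i+1) counted once, periodic boundaries
H = -J * np.sum(configs * np.roll(configs, -1, axis=1), axis=1)
# Fictitious single-spin Hamiltonian
H_g = -g * configs.sum(axis=1)

# Trial distribution p(g) and averages under it
w = np.exp(-beta * H_g)
Z_g = w.sum()
p_g = w / Z_g
m_g = np.sum(p_g * configs[:, 0])
H_avg = np.sum(p_g * H)

# Exact free energy of the true model and the variational free energy
F0 = -kT * np.log(np.sum(np.exp(-beta * H)))
F_g = -kT * np.log(Z_g)
F_var = F_g + H_avg - np.sum(p_g * H_g)

print("m_g:   ", m_g, "vs tanh(beta g):", np.tanh(beta * g))
print("<H>_g: ", H_avg, "vs formula:", -J * N * zeta / 2 * m_g**2)
print("F[p(g)] =", F_var, ">= F_0 =", F0)
```

Enumeration scales as \(2^N\), so this check is only practical for small \(N\); it is meant to confirm the formulas, not to replace them.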

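Putting it all together,

\[F[p(g)] = F_{g} + \langle H\rangle_{g} - \langle H_{g}\rangle_{g} = -N \beta^{-1} \ln(2\cosh \beta g) + N g\, m_{g} - \frac{J N \zeta}{2}\, m_{g}^{2}.\]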

Now, finally, we minimize (\(m_{g}^{\prime}=\partial_{g} m_{g}\)):

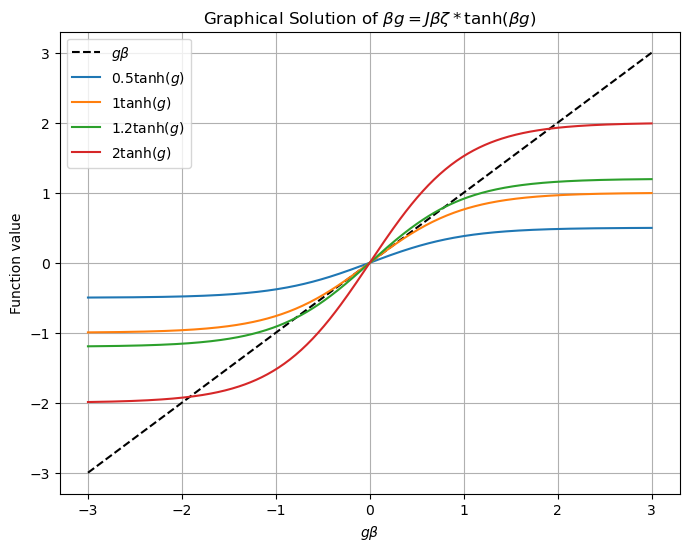

So, we obtain the self-consistency condition

\[g = J \zeta\, m_{g} = J \zeta \tanh(\beta g),\]

which has a simple physical interpretation: in \(H=-J\sum_{\langle i, j\rangle} \sigma_{i} \sigma_{j}\), each \(\sigma_{i}\) sees “on average” a field \(J \zeta \langle \sigma_i\rangle_g=J \zeta m_g\) induced by its neighbors, suggesting \(H\approx -J \zeta m_g\sum_i \sigma_i\). Since \(H_{g}=-g \sum_i \sigma_{i}\), the condition is \(g=J \zeta m_g\).
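One simple way to solve the self-consistency condition numerically is fixed-point iteration: start from a guess for the field and repeatedly replace it with the field the neighbors would induce. The sketch below works with the dimensionless variables \(x=\beta g\) and \(K=J\zeta\beta\), where \(K\) is a shorthand introduced here rather than notation from the notes; the function name, starting value, and tolerance are likewise illustrative choices.

```python
import numpy as np

def solve_mean_field(K, x0=1.0, tol=1e-12, max_iter=100_000):
    """Iterate x -> K tanh(x), where x = beta*g and K = J*zeta*beta.

    Starting from x0 > 0 the iteration converges to the non-negative
    solution: x = 0 for K <= 1, and the positive root for K > 1.
    """
    x = x0
    for _ in range(max_iter):
        x_new = K * np.tanh(x)
        if abs(x_new - x) < tol:
            return x_new
        x = x_new
    return x  # not converged within max_iter (slow near K = 1)

for K in [0.5, 1.2, 2.0]:
    x = solve_mean_field(K)
    print(f"K = {K}: beta*g = {x:.6f}, m_g = {np.tanh(x):.6f}")
```

The iteration mirrors the physical picture: each pass updates the mean field from the magnetization it produced. Convergence slows down as \(K\to 1\), so a root finder may be preferable close to the transition.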

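We can solve \(g=J \zeta \tanh (\beta g)\) analytically for small \(g\), using \(\tanh x \approx x - \tfrac{1}{3}x^{3}\):

\[\beta g \approx J \zeta \beta\left[\beta g - \tfrac{1}{3}(\beta g)^{3}\right].\]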

Solution 1: \(g=0 \longrightarrow m_{g}=0\)

But for \(J \zeta \beta>1\),

Solution 2: \(\beta g= \pm \sqrt{3\left(1-\frac{1}{J \zeta \beta}\right)}\)

For \(J \zeta \beta>1\), it can be verified that this is the lower-\(F\) solution: symmetry breaking!
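Both claims can be checked numerically. The sketch below again uses \(x=\beta g\) and the shorthand \(K=J\zeta\beta\) (an assumption of this example), with the free energy per spin written as \(\beta F[p(g)]/N=-\ln(2\cosh x)+x\tanh x-\tfrac{K}{2}\tanh^{2}x\). It finds the positive root of \(x=K\tanh x\) by bisection, compares it with the small-\(g\) estimate, and evaluates the free-energy difference relative to the \(g=0\) solution.

```python
import numpy as np

def beta_f(x, K):
    """beta * F[p(g)] / N as a function of x = beta*g, with K = J*zeta*beta."""
    m = np.tanh(x)
    return -np.log(2 * np.cosh(x)) + x * m - 0.5 * K * m**2

def numeric_root(K, lo=1e-6, hi=50.0):
    """Positive root of x = K tanh(x) by bisection (exists only for K > 1)."""
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if K * np.tanh(mid) - mid > 0:   # still below the root
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

for K in [1.01, 1.1, 1.5]:
    x_small = np.sqrt(3 * (1 - 1 / K))   # small-g estimate (Solution 2)
    x_num = numeric_root(K)
    dF = beta_f(x_num, K) - beta_f(0.0, K)
    print(f"K = {K}: estimate {x_small:.4f}, numerical {x_num:.4f}, "
          f"beta*(F - F(g=0))/N = {dF:.2e}")
```

The estimate should track the numerical root closely just above \(K=1\) and drift away as \(K\) grows, since the cubic truncation of \(\tanh\) is only accurate for small \(\beta g\). A negative free-energy difference confirms that the symmetry-broken solution is the preferred one.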

Graphical Solution of \(\beta g = J \zeta \beta \tanh(\beta g)\)

```python
import numpy as np
import matplotlib.pyplot as plt

# Each entry is a value of the dimensionless coupling J * zeta * beta
J_values = [0.5, 1, 1.2, 2]
x_values = np.linspace(-3, 3, 400)  # range of beta * g for plotting

plt.figure(figsize=(8, 6))

# Left-hand side of the self-consistency condition: the diagonal y = beta * g
plt.plot(x_values, x_values, label='$\\beta g$', linestyle='--', color='black')

# Right-hand side: J * zeta * beta * tanh(beta * g), one curve per coupling
for J in J_values:
    plt.plot(x_values, J * np.tanh(x_values), label=f'${J}\\,\\tanh(\\beta g)$')

plt.title('Graphical Solution of $\\beta g = J \\zeta \\beta \\tanh(\\beta g)$')
plt.xlabel('$\\beta g$')
plt.ylabel('Function value')
plt.legend()
plt.grid(True)
plt.show()
```

Is this variational approximation good?

It knows about the lattice and the dimension \(D=1,2,3\) only through the coordination number \(\zeta\); e.g., for a square (hypercubic) lattice, \(\zeta=2 D\).

But we know that the exact solution of the 1D Ising model does not have symmetry breaking at any nonzero temperature: the variational result is bad in 1D. On the other hand, if \((D, \zeta) \rightarrow \infty\), you can verify that \(F[p(g)]\) is identical to the exact result of the all-to-all model: it is good in large \(D\) and large \(\zeta\).
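The 1D failure can be made concrete by comparing against the standard transfer-matrix result for the zero-field chain in the thermodynamic limit, \(f_{\text{exact}}=-k_BT\ln(2\cosh\beta J)\) per spin; that formula is quoted here as a known result rather than derived in these notes. The sketch below assumes \(k_B=1\), \(J=1\), and \(\zeta=2\), and minimizes the variational free energy per spin by a simple scan over \(x=\beta g\).

```python
import numpy as np

J, zeta = 1.0, 2                          # 1D chain, units with k_B = 1 (assumption)
x_grid = np.linspace(0.0, 10.0, 20001)    # scan of x = beta*g

def compare(kT):
    """Return (variational m, variational f, exact f) per spin at temperature kT."""
    beta = 1.0 / kT
    K = J * zeta * beta
    m = np.tanh(x_grid)
    f_trial = kT * (-np.log(2 * np.cosh(x_grid)) + x_grid * m - 0.5 * K * m**2)
    i = np.argmin(f_trial)
    f_exact = -kT * np.log(2 * np.cosh(beta * J))   # exact 1D result, zero field
    return m[i], f_trial[i], f_exact

for kT in [0.5, 1.0, 3.0]:
    m_var, f_var, f_exact = compare(kT)
    print(f"kT = {kT}: m_var = {m_var:.3f}, f_var = {f_var:.4f}, f_exact = {f_exact:.4f}")
```

The variational free energy always lies above the exact one, as the bound requires, but for \(k_BT<J\zeta\) the ansatz reports a nonzero magnetization that the exact 1D solution does not have.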

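For \(D=2,3\), the accuracy is intermediate; it correctly predicts symmetry breaking but doesn’t get \(T_{c}\) or the exponent in \(m \sim\left|T-T_{c}\right|^{\beta}\) quantitatively right (here \(\beta\) is the critical exponent, not the inverse temperature). For example, the condition \(J\zeta\beta>1\) gives \(k_B T_c = J\zeta = 4J\) on the square lattice, and the small-\(g\) solution gives an exponent of \(1/2\), whereas the exact 2D result is \(k_B T_c \approx 2.27 J\) with an exponent of \(1/8\).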